DEEP FAKE BIRDSONG

“If you want to find the secrets of the universe, think in terms of energy, frequency and vibration.”

The difference between digital and analog electronics is significant. Digital relies on binary patterns made with electrical pulses, also known as “bits” or 1s and 0s. Bits are used to form complex code sequences, analogous to strands of DNA. Digital is a linguistic technology because electricity is used to encode binary sequences that have defined meaning. By contrast, analog circuits work with continuous waveforms. Analog electronic devices do not read or write pre-defined sequences of code: their physical design and constitution shape electricity into complex patterns of vibration. To use a metaphor, the resonant sound quality of a drum is analog, whereas the drum beat is digital.

Analog electronics can be life-like with drastically fewer components than a digital solution. In this regard, Heaton's work has much in common with the Tortoises of William Grey Walter’s Machina speculatrix (1948) and BEAM robotics (Mark W. Tilden et al., 1991). Analog electronic oscillation is simply vibration with amplitude and frequency, and this simplicity can be very powerful. To quote a well-known electrical engineer:

Heaton’s “Breadbird #1” combines five adjustable oscillators with one modified Hartley oscillator to generate birdlike sounds based on how the circuit vibrates in response to a voltage source. There is no code or audio recording, just electromechanical vibration. Obviously, five adjustable oscillators do not offer infinite complexity, but the design principle is evident: an analog system of coupled oscillators is a powerful way to generate signal complexity thanks to its continuous, nonlinear physical properties. The schematic for Heaton’s “Breadbird #1,” 2019 is shown below.
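To give a feel for how a handful of oscillators can shape a sound, here is a minimal digital sketch. It is not Heaton’s circuit and it is not analog: it is a simplified numerical stand-in in which three slow sine waves bend the pitch of one faster tone. The function name, the modulator rates and depths, and the sample rate are all assumptions chosen for illustration, and the influence runs one way only (the slow oscillators affect the fast one, not the reverse), whereas the coupling in a physical circuit is mutual.

```python
import math

def chirp_samples(duration_s=1.0, sample_rate=8000, carrier_hz=3000.0,
                  modulators=((2.0, 400.0), (7.0, 250.0), (13.0, 120.0))):
    """Return samples of a tone whose pitch is bent by slow oscillators.

    Each modulator is a hypothetical (rate_hz, depth_hz) pair: a slow sine
    wave that pushes the carrier frequency up or down by at most depth_hz.
    """
    samples = []
    phase = 0.0
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        # Instantaneous frequency: the carrier plus the sum of slow modulators.
        freq = carrier_hz + sum(depth * math.sin(2 * math.pi * rate * t)
                                for rate, depth in modulators)
        # Accumulate phase so the pitch glides smoothly instead of jumping.
        phase += 2 * math.pi * freq / sample_rate
        samples.append(math.sin(phase))
    return samples

tone = chirp_samples()
print(len(tone), "samples generated")
```

Even with only three modulators at unrelated rates, the pitch contour takes a long time to repeat, which hints at why a few interacting oscillators can sound surprisingly organic.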

Electricity, vibration, and the building blocks of consciousness

“It is unlikely that the number of perceptible functional elements in the human brain is anything like the total number of nerve cells; it is more likely to be on the order of 1,000. But even if it were only 10, this number of elements could provide enough variety for a lifetime of experience for all the men who ever lived or will be born if mankind survived a thousand million years.”

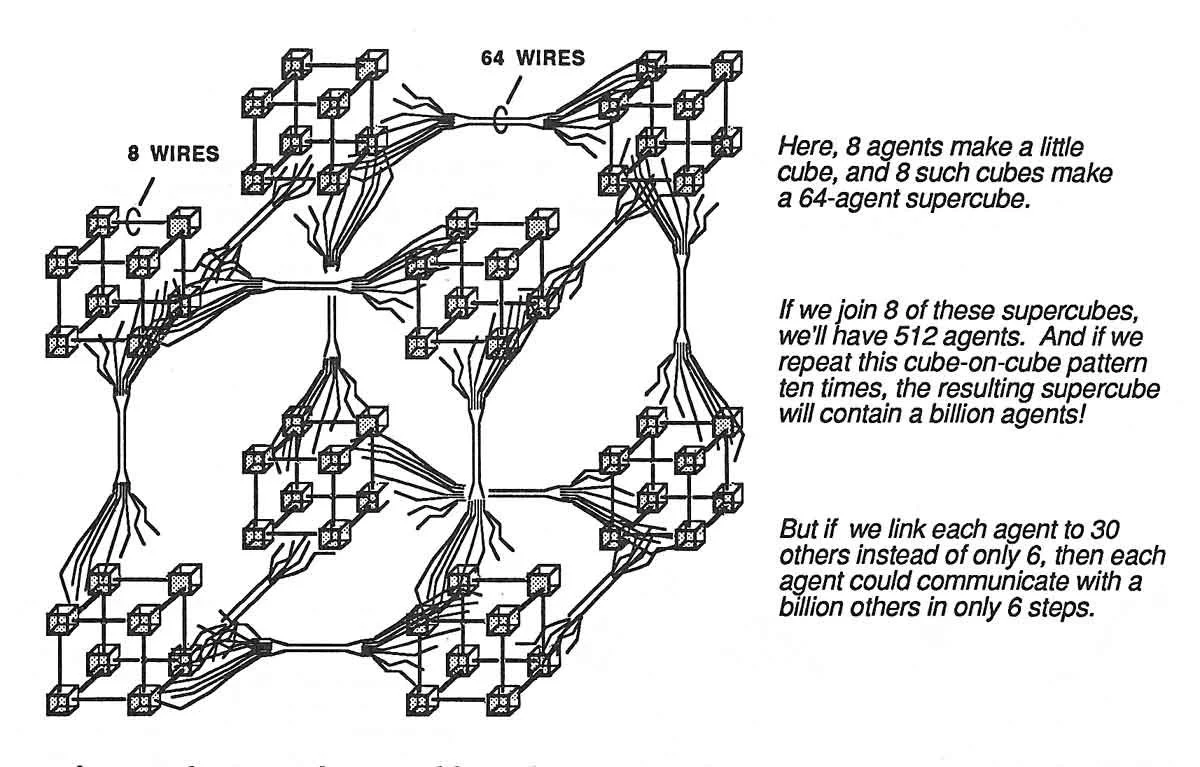

Imagine the complexity generated by billions of coupled oscillators, as is the case with the human brain. The magnitude and nonlinearity of that scale are impossible to fathom. Could this be the mystery of consciousness? There is no ghost in the machine because the machine is an analog electronic ghost maker: an oscillating circuit vastly superior to Heaton’s electronic songbirds.

“He wanted to prove that rich connections between a small number of brain cells could give rise to very complex behaviors - essentially that the secret of how the brain worked lay in how it was wired up.”

Schematic for Kelly Heaton’s analog electronic birdsong-generating sculpture used in the Deep Fake Birdsong experiment, 2019-2020

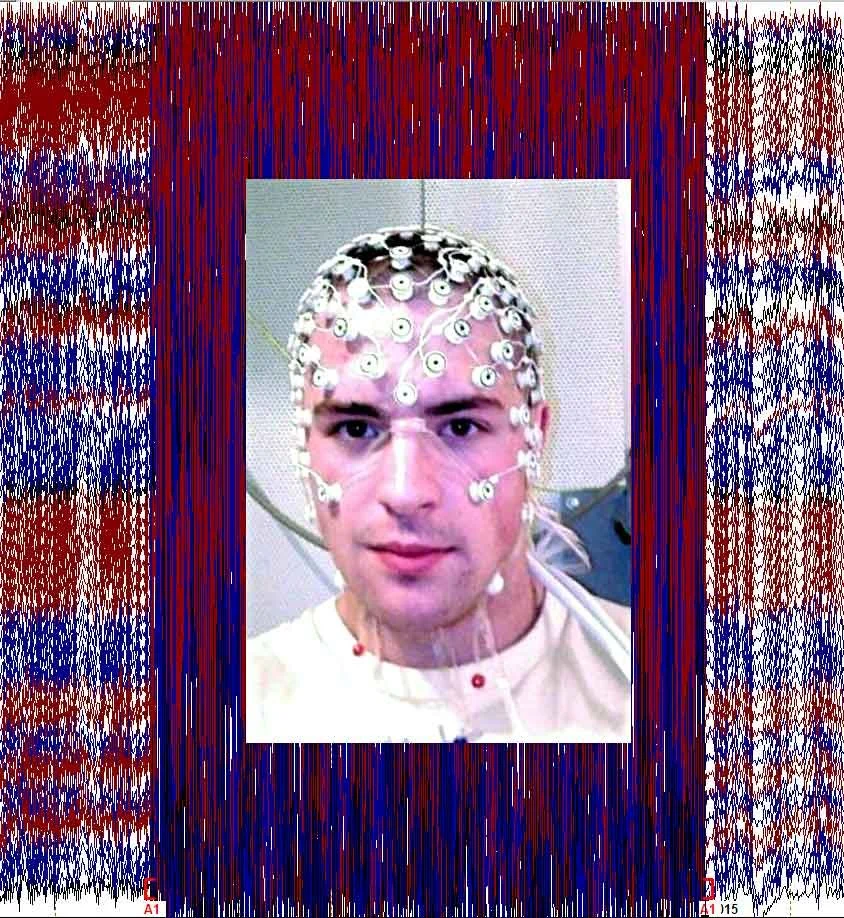

Electrical activity in the hippocampus of a mouse, recorded by Dr. Garrett Stuber

THE DEEP FAKE BIRDSONG EXPERIMENT, 2020

“Deep Fake Birdsong unites art with science to inquire about the electrical nature of lifeforms and to push the envelope of artificial intelligence.”

WHAT KIND OF BIRD IS THIS CIRCUIT?

In Fall 2019, Kelly Heaton invited artist and engineer Johann Diedrick to conduct an experiment: what does one form of artificial intelligence think about another form? We used software for birdsong identification (Diedrick’s Flights of Fancy, 2019) to examine a circuit for birdsong generation (Heaton’s Breadbird, 2019).

What would Diedrick’s bird identification software say about Heaton’s circuit that sings like a bird? Would the software think it is a real bird and, if so, which species? Any correlation with a real bird would be coincidental because Heaton made no effort to engineer a specific species, only generic bird-like sounds. Therefore, in the spirit of electronic Dadaism, we asked Diedrick’s digital software to “identify her analog circuit for us.”

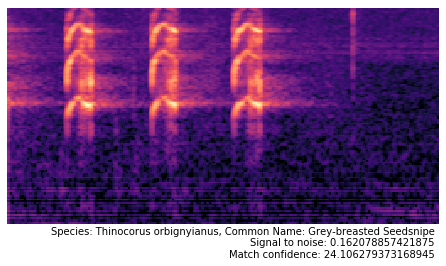

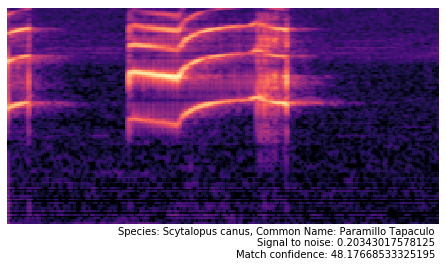

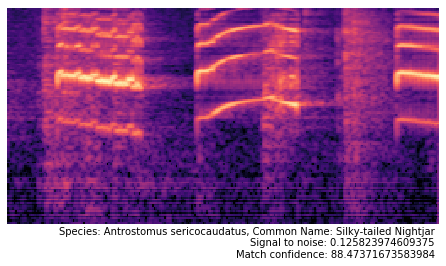

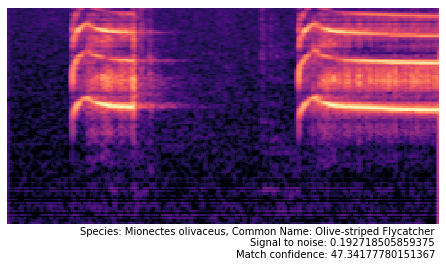

Based on a 2:02 (122-second) audio recording of Heaton’s singing circuit, Diedrick generated 122 spectrograms that we ran through his Flights of Fancy model (trained on the BirdCLEF dataset). Out of 1,500 possibilities, Diedrick’s algorithm identified only four possible bird species, with “match confidence” ratings from 19% to 96%. The four species of identified birds are as follows:

Grey-breasted Seedsnipe: 16 matches with an average percent confidence of 26.515

*Silky-tailed Nightjar: 47 matches with an average percent confidence of 93.147

Paramillo Tapaculo: 54 matches with an average percent confidence of 48.991

Olive-striped Flycatcher: 5 matches with an average percent confidence of 47.938

*Notably, 47 of the 122 spectrograms were matched with the Silky-tailed Nightjar (Antrostomus sericocaudatus) with an average confidence of 93%. This is remarkably high fidelity for more than a third of the analyzed spectrograms! Heaton asked an ornithologist friend for her opinion of the experimental results, and she said that nightjars are pre-passerine birds with a relatively simple song structure, similar to the output of the circuit used for Deep Fake Birdsong. To honor these delightful insights, Heaton christened her first electronic songbird product “Nightjar,” available through Adafruit starting in 2023.
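The arithmetic behind these results can be checked with a short sketch. The counts and average confidences below are copied from the list above; the function name and the idea of a count-weighted overall average are our own additions for illustration and are not part of the original analysis.

```python
# Match counts and average percent confidences as reported in the text.
reported = {
    "Grey-breasted Seedsnipe": (16, 26.515),
    "Silky-tailed Nightjar": (47, 93.147),
    "Paramillo Tapaculo": (54, 48.991),
    "Olive-striped Flycatcher": (5, 47.938),
}

def summarize(results):
    """Return (total matches, percent share per species, weighted mean confidence)."""
    total = sum(count for count, _ in results.values())
    shares = {species: 100 * count / total
              for species, (count, _) in results.items()}
    # Weighting each species average by its match count gives the mean
    # confidence across all spectrograms.
    weighted = sum(count * conf for count, conf in results.values()) / total
    return total, shares, weighted

total, shares, weighted = summarize(reported)
print(total)
for species, share in shares.items():
    print(f"{species}: {share:.1f}% of spectrograms")
print(f"Overall mean confidence: {weighted:.1f}%")
```

The four counts add up to all 122 spectrograms, and the nightjar accounts for about 38.5% of them, slightly fewer than the Paramillo Tapaculo but with a far higher average confidence.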

TECHNICAL DETAILS OF DIEDRICK’S AI SOFTWARE SYSTEM

Detecting and identifying birds in field recordings is a long-standing problem in bioacoustics and acoustic scene recognition [1, 2]. Only in recent years has large-scale bird detection and recognition across large datasets of recordings become possible, facilitated by convolutional neural networks (CNNs) and other deep learning techniques [3]. With deep neural networks, we can scan through recordings, identify where bird calls occur, and report which bird species produced each call. In his work Flights of Fancy, Diedrick trained a CNN through transfer learning to recognize birds found in the BirdCLEF dataset, which contains over 36,000 recordings across 1,500 species of birds, primarily from South America [4, 5]. With this trained model, it is possible to take new recordings that the model has never seen before, pass them through the software system, and produce a prediction of which bird species the system thinks each recording contains (limited to the species in the dataset).
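As a rough illustration of that last step, a classifier of this kind typically produces one raw score per known species, which can be converted into probabilities and reduced to a single best guess. The sketch below assumes a softmax conversion; whether Flights of Fancy reports its “match confidence” in exactly this way is an assumption, and the scores shown are invented for the example rather than taken from the model.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_prediction(scores, labels):
    """Return the most likely label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical scores for one spectrogram (illustrative values only).
labels = ["Grey-breasted Seedsnipe", "Silky-tailed Nightjar",
          "Paramillo Tapaculo", "Olive-striped Flycatcher"]
scores = [0.2, 4.1, 1.3, 0.5]
species, confidence = top_prediction(scores, labels)
print(species, f"{100 * confidence:.0f}%")
```

Because the model can only choose among the species it was trained on, it always returns one of them, even for a sound source, such as a circuit, that is not a bird at all.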

The Flights of Fancy software system was developed as follows. First, the BirdCLEF 2018 dataset was downloaded and sorted so that all of the audio recordings for a species sit in a folder named for that species. This way, the folder name serves as the label for training. Next, a pre-processing step went through all of the recordings and segmented out bird calls using a signal-to-noise heuristic, which treats a signal with a significant amount of upward/downward variation as a chirp. The system saves these segments as spectrogram images (a frequency representation of a signal over time), again in folders named after each species, to be used for training. Finally, the system uses a technique known as transfer learning [6]: it takes a specific kind of neural network (ResNet) already pre-trained on images (ImageNet) and leverages its pretrained weights to train more effectively on our generated spectrogram images of bird calls. From there, our model was trained down to a 27% error rate in predicting the species of a bird call from spectrograms drawn from that specific dataset.
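The first two steps can be sketched in a few lines. This is a simplified stand-in, not the code from the Flights of Fancy notebook: the folder layout, the function names, the frame length, and the threshold ratio are all hypothetical, and the segmentation here uses a plain energy threshold against the median frame energy rather than the actual signal-to-noise heuristic.

```python
from pathlib import PurePosixPath
from statistics import median

def species_label(recording_path):
    """Use the name of the parent folder as the training label."""
    return PurePosixPath(recording_path).parent.name

def loud_frames(samples, frame_len=4, ratio=3.0):
    """Return indices of frames whose energy exceeds ratio x the median energy.

    The median frame energy serves as a crude estimate of the noise floor.
    """
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)
    if not energies:
        return []
    floor = median(energies)
    return [i for i, energy in enumerate(energies) if energy > ratio * floor]

# Hypothetical path and a toy signal: quiet noise with one loud burst.
print(species_label("birdclef/Antrostomus_sericocaudatus/rec001.wav"))
signal = [0.01] * 8 + [1.0, -1.0, 1.0, -1.0] + [0.01] * 8
print(loud_frames(signal))
```

In a full pipeline, each run of loud frames would be cut out, converted to a spectrogram image, and saved under the folder returned by `species_label`, ready for the transfer learning step.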

References:

[1] V. Lostanlen, J. Salamon, A. Farnsworth, S. Kelling, and J. P. Bello. "BirdVox-full-night: A Dataset and Benchmark for Avian Flight Call Detection." Proc. IEEE ICASSP, 2018.

[2] J. Salamon, J. P. Bello, A. Farnsworth, M. Robbins, S. Keen, H. Klinck, and S. Kelling. "Towards the Automatic Classification of Avian Flight Calls for Bioacoustic Monitoring." PLoS One, 2016.

[3] https://arxiv.org/pdf/1804.07177.pdf

[4] https://github.com/aquietlife/flightsoffancy/blob/master/flightsoffancy.ipynb

[5] https://www.aicrowd.com/challenges/lifeclef-2018-bird-monophone

[6] https://docs.fast.ai/vision.learner.html#Transfer-learning

“The original question, ‘Can machines think?’ I believe to be too meaningless to deserve discussion.”